FLAREr example

flare-example-vignette.RmdBackground

This document serves as a users guide and a tutorial for the FLARE (Forecasting Lake and Reservoir Ecosystems) system (Thomas et al. 2020). FLARE generates forecasts and forecast uncertainty of water temperature and water quality for 1 to 35-dau ahead time horizon at multiple depths of a lake or reservoir. It uses data assimilation to update the initial starting point for a forecast and the model parameters based a real-time statistical comparisons to observations. It has been developed, tested, and evaluated for Falling Creek Reservoir in Vinton,VA (Thomas et al. 2020) and National Ecological Observatory Network lakes (Thomas et al. 2023

FLARE is a set of R scripts that

- Generating the inputs and configuration files required by the General Lake Model (GLM)

- Applying data assimilation to GLM

- Processing and archiving forecast output

- Visualizing forecast output

FLARE uses the 1-D General Lake Model (Hipsey et al. 2019) as the mechanistic process model that predicts hydrodynamics of the lake or reservoir. For forecasts of water quality, it uses GLM with the Aquatic Ecosystem Dynamics library. The binaries for GLM and GLM-AED are included in the FLARE code that is available on GitHub. FLARE requires GLM version 3.3 or higher.

More information about the GLM can be found here:

FLARE development has been supported by grants from National Science Foundation (CNS-1737424, DEB-1753639, EF-1702506, DBI-1933016, DEB-1926050)

Requirements

- RStudio

-

FLARErR package -

FLARErdependencies

1: Set up

First, install the FLAREr package from GitHub. There

will be other required packages that will also be downloaded.

remotes::install_github("flare-forecast/FLAREr")Second, download the General Lake Model (GLM) code. You can get in using multiple pathways

The easiest way is to install the GLM3r package from

Github using

remotes::install_github("rqthomas/GLM3r")

#> Using github PAT from envvar GITHUB_PAT. Use `gitcreds::gitcreds_set()` and unset GITHUB_PAT in .Renviron (or elsewhere) if you want to use the more secure git credential store instead.

#> Skipping install of 'GLM3r' from a github remote, the SHA1 (977b2236) has not changed since last install.

#> Use `force = TRUE` to force installationAfter installing GLM3r you need to set an environment variable that points to the GLM code.

Sys.setenv('GLM_PATH'='GLM3r')Third, create a directory that will be your working directory for

your FLARE run. To find this directory on your computer you can use

print(lake_directory)

lake_directory <- normalizePath(tempdir(), winslash = "/")

dir.create(file.path(lake_directory, "configuration/default"), recursive = TRUE)

dir.create(file.path(lake_directory, "targets")) # For QAQC data

dir.create(file.path(lake_directory, "drivers")) # Weather and inflow forecasts2: Configuration files

First, FLAREr requires two configuration yaml files. The

code below copies examples from the FLAREr package.

file.copy(system.file("extdata", "configuration", "default", "configure_flare.yml", package = "FLAREr"), file.path(lake_directory, "configuration", "default", "configure_flare.yml"))

#> [1] TRUE

file.copy(system.file("extdata", "configuration", "default", "configure_run.yml", package = "FLAREr"), file.path(lake_directory, "configuration", "default", "configure_run.yml"))

#> [1] TRUESecond, FLAREr requires a set of configuration CSV

files. The CSV files are used to define the states that are simulated

and the parameters that are calibrated. The code below copies examples

from the FLAREr package

file.copy(system.file("extdata", "configuration", "default", "parameter_calibration_config.csv", package = "FLAREr"), file.path(lake_directory, "configuration", "default", "parameter_calibration_config.csv"))

#> [1] TRUE

file.copy(system.file("extdata", "configuration", "default", "states_config.csv", package = "FLAREr"), file.path(lake_directory, "configuration", "default", "states_config.csv"))

#> [1] TRUE

file.copy(system.file("extdata", "configuration", "default", "depth_model_sd.csv", package = "FLAREr"), file.path(lake_directory, "configuration", "default", "depth_model_sd.csv"))

#> [1] TRUE

file.copy(system.file("extdata", "configuration", "default", "observations_config.csv", package = "FLAREr"), file.path(lake_directory, "configuration", "default", "observations_config.csv"))

#> [1] TRUEThird, FLAREr requires GLM specific configurations files. For applications that require on water temperature, only the GLM namelist file is needed. Applications that require other water quality variables will require additional namelist files that are associated with the aed model.

file.copy(system.file("extdata", "configuration", "default", "glm3.nml", package = "FLAREr"), file.path(lake_directory, "configuration", "default", "glm3.nml"))

#> [1] TRUE3: Observation and driver files

Since the FLAREr package for general application, scripts to download and process observation and drivers are not included in the package. Therefore the application of FLARE to a lake will require a set of additional scripts that are specific to the data formats for the lakes. The example includes files for application to FCR.

file.copy(from = system.file("extdata/targets", package = "FLAREr"), to = lake_directory, recursive = TRUE)

#> [1] TRUE

file.copy(from = system.file("extdata/drivers", package = "FLAREr"), to = lake_directory, recursive = TRUE)

#> [1] TRUEFirst, FLAREr requires the observation file to have a specific name (observations_postQAQC_long.csv) and format.

head(read_csv(file.path(lake_directory,"targets/fcre/fcre-targets-insitu.csv"), show_col_types = FALSE))

#> # A tibble: 6 × 5

#> datetime site_id depth observation variable

#> <dttm> <chr> <dbl> <dbl> <chr>

#> 1 2022-09-28 00:00:00 fcre 0 20.5 temperature

#> 2 2022-09-29 00:00:00 fcre 0 19.6 temperature

#> 3 2022-09-30 00:00:00 fcre 0 18.8 temperature

#> 4 2022-10-01 00:00:00 fcre 0 17.2 temperature

#> 5 2022-10-02 00:00:00 fcre 0 16.0 temperature

#> 6 2022-10-03 00:00:00 fcre 0 15.4 temperature2: Configure simulation (GLM)

The configuration functions are spread across the files. These files are described in more detail below

glm3.nmlconfigure_flare.ymlconfigure_run.ymlstates_config.csvobservations_config.csvparameter_calibration_config.csvdepth_model_sd.csv

configure_run.yml

This file is the configuration file that define the specific timing of the run.

-

restart_file: This is the full path to the file that you want to use as initial conditions for the simulation. You will set this toNAif the simulation is not a continuation of a previous simulation. -

sim_name: a string with the name of your simulation. This will appear in your output file names -

forecast_days: This is your forecast horizon. The max is16days. Set to0if only doing data assimilation with observed drivers. -

start_datetime: The date time of day you want to start a forecast. Because GLM is a daily timestep model, the simulation will start at this time. It usesYYYY-MM-DD mm:hh:ssformat and must only be a whole hour. It is in the UTC time. It can be any hour if only doing data assimilation with observed drivers (forecast_days = 0). If forecasting (forecast_days > 0) it is required to match up with the availability of a NOAA forecast. NOAA forecasts are available at the following times UTC so you must select a local time that matches one of these times (i.e., 07:00:00 at FCR is the 12:00:00 UTC NOAA forecast).- 00:00:00 UTC

- 06:00:00 UTC

- 12:00:00 UTC

- 18:00:00 UTC

-

forecast_start_datetime: The date that you want forecasting to start in your simulation. Uses the YYYY-MM-DD mm:hh:ss format (e.g., “2019-09-20 00:00:00”). The difference betweenstart_timeandforecast_start_datetimedetermines how many days of data assimilation occur using observed drivers before handing off to forecasted drivers and not assimilating data -

configure_flare: name of FLARE configuration file located in yourconfiguration/[config_set]directory (configure_flare.yml) -

configure_obs: name of optional observation processing configuration file located in yourconfiguration/[config_set]directory (configure_obs.yml) -

use_s3: use s3 cloud storage for saving forecast, scores, and restart files.

glm3.nml

glm3.nml is the configuration file that is required by

GLM. It can be configured to run only GLM or GLM + AED. This version is

already configured to run only GLM for FCR and you do not need to modify

it for the example simulation.

3: Run your GLM example simulation

Read configuration files

The following reads in the configuration files and overwrites the directory locations based on the lake_directory and directories provided above. In practice you will specific these directories in the configure file and not overwrite them.

next_restart <- FLAREr::run_flare(lake_directory = lake_directory,configure_run_file = "configure_run.yml", config_set_name = "default")

#> Warning in set_up_simulation(configure_run_file, lake_directory, clean_start =

#> clean_start, : NAs introduced by coercion

#> Warning in set_up_simulation(configure_run_file, lake_directory, clean_start =

#> clean_start, : NAs introduced by coercion

#> Warning in set_up_simulation(configure_run_file, lake_directory, clean_start =

#> clean_start, : NAs introduced by coercion

#> Warning in set_up_simulation(configure_run_file, lake_directory, clean_start =

#> clean_start, : NAs introduced by coercion

#> Warning in set_up_simulation(configure_run_file, lake_directory, clean_start =

#> clean_start, : NAs introduced by coercion

#> Running forecast that starts on: 2022-09-28 00:00:00

#> Retrieving Observational Data...

#> Generating Met Forecasts...

#> Registered S3 method overwritten by 'tsibble':

#> method from

#> as_tibble.grouped_df dplyr

#> Registered S3 method overwritten by 'quantmod':

#> method from

#> as.zoo.data.frame zoo

#> Creating inflow/outflow files...

#> Setting states and initial conditions...

#> Running time step 1/20 : 2022-09-28 00:00 - 2022-09-29 00:00 [2025-08-21 18:59:42.885747]

#> performing data assimilation

#> zone1temp: mean 11.1375 sd 1.0221

#> zone2temp: mean 14.1642 sd 1.2743

#> lw_factor: mean 0.9721 sd 0.0525

#> Running time step 2/20 : 2022-09-29 00:00 - 2022-09-30 00:00 [2025-08-21 18:59:48.347103]

#> performing data assimilation

#> zone1temp: mean 11.4405 sd 1.0471

#> zone2temp: mean 14.1361 sd 1.2831

#> lw_factor: mean 0.9866 sd 0.0465

#> Running time step 3/20 : 2022-09-30 00:00 - 2022-10-01 00:00 [2025-08-21 18:59:50.291485]

#> performing data assimilation

#> zone1temp: mean 11.832 sd 0.9602

#> zone2temp: mean 14.7372 sd 1.4802

#> lw_factor: mean 0.8995 sd 0.0423

#> Running time step 4/20 : 2022-10-01 00:00 - 2022-10-02 00:00 [2025-08-21 18:59:52.273699]

#> performing data assimilation

#> zone1temp: mean 11.6925 sd 1.3402

#> zone2temp: mean 14.6477 sd 2.1824

#> lw_factor: mean 0.9234 sd 0.0415

#> Running time step 5/20 : 2022-10-02 00:00 - 2022-10-03 00:00 [2025-08-21 18:59:54.261146]

#> zone1temp: mean 11.7124 sd 1.5978

#> zone2temp: mean 14.8037 sd 2.4642

#> lw_factor: mean 0.9187 sd 0.0493

#> Running time step 6/20 : 2022-10-03 00:00 - 2022-10-04 00:00 [2025-08-21 18:59:56.263757]

#> zone1temp: mean 11.8303 sd 1.9282

#> zone2temp: mean 14.9288 sd 2.8023

#> lw_factor: mean 0.9168 sd 0.0466

#> Running time step 7/20 : 2022-10-04 00:00 - 2022-10-05 00:00 [2025-08-21 18:59:58.314797]

#> zone1temp: mean 11.7116 sd 2.0631

#> zone2temp: mean 14.8918 sd 3.1777

#> lw_factor: mean 0.914 sd 0.0552

#> Running time step 8/20 : 2022-10-05 00:00 - 2022-10-06 00:00 [2025-08-21 19:00:00.353961]

#> zone1temp: mean 11.7555 sd 2.3384

#> zone2temp: mean 14.8048 sd 3.0798

#> lw_factor: mean 0.9177 sd 0.0615

#> Running time step 9/20 : 2022-10-06 00:00 - 2022-10-07 00:00 [2025-08-21 19:00:02.241181]

#> zone1temp: mean 11.8282 sd 2.5837

#> zone2temp: mean 14.7285 sd 3.0437

#> lw_factor: mean 0.9195 sd 0.0647

#> Running time step 10/20 : 2022-10-07 00:00 - 2022-10-08 00:00 [2025-08-21 19:00:04.095983]

#> zone1temp: mean 11.6947 sd 2.846

#> zone2temp: mean 14.6585 sd 3.3599

#> lw_factor: mean 0.9197 sd 0.0679

#> Running time step 11/20 : 2022-10-08 00:00 - 2022-10-09 00:00 [2025-08-21 19:00:05.974499]

#> zone1temp: mean 11.6428 sd 3.4917

#> zone2temp: mean 14.6185 sd 3.3229

#> lw_factor: mean 0.9209 sd 0.0669

#> Running time step 12/20 : 2022-10-09 00:00 - 2022-10-10 00:00 [2025-08-21 19:00:07.827773]

#> zone1temp: mean 11.5888 sd 3.5861

#> zone2temp: mean 14.8978 sd 3.3326

#> lw_factor: mean 0.9221 sd 0.0712

#> Running time step 13/20 : 2022-10-10 00:00 - 2022-10-11 00:00 [2025-08-21 19:00:09.709956]

#> zone1temp: mean 11.4508 sd 3.4511

#> zone2temp: mean 14.8334 sd 3.241

#> lw_factor: mean 0.9266 sd 0.0725

#> Running time step 14/20 : 2022-10-11 00:00 - 2022-10-12 00:00 [2025-08-21 19:00:11.588118]

#> zone1temp: mean 11.7084 sd 3.503

#> zone2temp: mean 14.894 sd 3.5116

#> lw_factor: mean 0.926 sd 0.0761

#> Running time step 15/20 : 2022-10-12 00:00 - 2022-10-13 00:00 [2025-08-21 19:00:13.512618]

#> zone1temp: mean 11.9343 sd 3.5267

#> zone2temp: mean 15.0102 sd 3.5515

#> lw_factor: mean 0.9259 sd 0.0774

#> Running time step 16/20 : 2022-10-13 00:00 - 2022-10-14 00:00 [2025-08-21 19:00:15.436418]

#> zone1temp: mean 11.9746 sd 3.3373

#> zone2temp: mean 14.7436 sd 3.7195

#> lw_factor: mean 0.9244 sd 0.079

#> Running time step 17/20 : 2022-10-14 00:00 - 2022-10-15 00:00 [2025-08-21 19:00:17.392425]

#> zone1temp: mean 12.192 sd 3.4602

#> zone2temp: mean 14.7866 sd 3.6314

#> lw_factor: mean 0.9201 sd 0.0823

#> Running time step 18/20 : 2022-10-15 00:00 - 2022-10-16 00:00 [2025-08-21 19:00:19.344236]

#> zone1temp: mean 12.06 sd 3.6724

#> zone2temp: mean 14.6612 sd 3.684

#> lw_factor: mean 0.9251 sd 0.0857

#> Running time step 19/20 : 2022-10-16 00:00 - 2022-10-17 00:00 [2025-08-21 19:00:21.309771]

#> zone1temp: mean 11.9983 sd 3.558

#> zone2temp: mean 14.6992 sd 3.5753

#> lw_factor: mean 0.9187 sd 0.0861

#> Running time step 20/20 : 2022-10-17 00:00 - 2022-10-18 00:00 [2025-08-21 19:00:23.248761]

#> zone1temp: mean 12.042 sd 3.9072

#> zone2temp: mean 14.7814 sd 3.9474

#> lw_factor: mean 0.9149 sd 0.0775

#> Writing restart

#> Writing forecast

#> starting writing dataset

#> ending writing dataset

#> successfully generated flare forecats for: fcre-2022-10-02-testVisualizing output

df <- arrow::open_dataset(file.path(lake_directory,"forecasts/parquet")) |> collect()

head(df)

#> # A tibble: 6 × 14

#> reference_datetime datetime pub_datetime depth family

#> <dttm> <dttm> <dttm> <dbl> <chr>

#> 1 2022-10-02 00:00:00 2022-09-28 00:00:00 2025-08-21 19:00:25 0 ensemble

#> 2 2022-10-02 00:00:00 2022-09-28 00:00:00 2025-08-21 19:00:25 0 ensemble

#> 3 2022-10-02 00:00:00 2022-09-28 00:00:00 2025-08-21 19:00:25 0 ensemble

#> 4 2022-10-02 00:00:00 2022-09-28 00:00:00 2025-08-21 19:00:25 0 ensemble

#> 5 2022-10-02 00:00:00 2022-09-28 00:00:00 2025-08-21 19:00:25 0 ensemble

#> 6 2022-10-02 00:00:00 2022-09-28 00:00:00 2025-08-21 19:00:25 0 ensemble

#> # ℹ 9 more variables: parameter <int>, variable <chr>, prediction <dbl>,

#> # forecast <dbl>, variable_type <chr>, log_weight <dbl>, site_id <chr>,

#> # model_id <chr>, reference_date <chr>

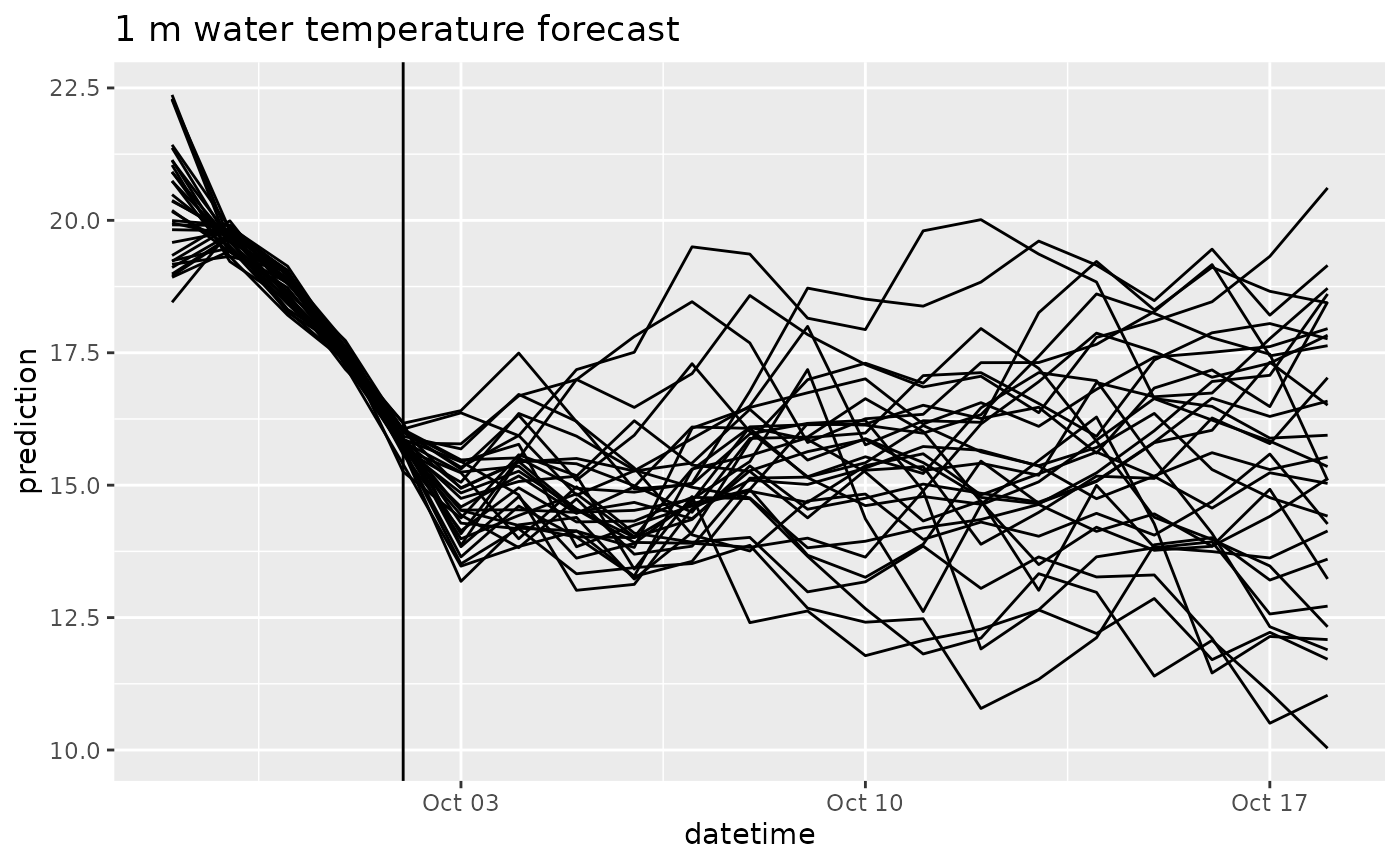

df |>

filter(variable == "temperature",

depth == 1) |>

ggplot(aes(x = datetime, y = prediction, group = parameter)) +

geom_line() +

geom_vline(aes(xintercept = as_datetime(reference_datetime))) +

labs(title = "1 m water temperature forecast")

targets_df <- read_csv(file.path(lake_directory, "targets/fcre/fcre-targets-insitu.csv"), show_col_types = FALSE)

combined_df <- left_join(df, targets_df, by = join_by(datetime, depth, variable, site_id))

combined_df |>

filter(variable == "temperature",

depth == 1) |>

ggplot(aes(x = datetime, y = prediction, group = parameter)) +

geom_line() +

geom_vline(aes(xintercept = as_datetime(reference_datetime))) +

geom_point(aes(y = observation), color = "red") +

labs(title = "1 m water temperature forecast")

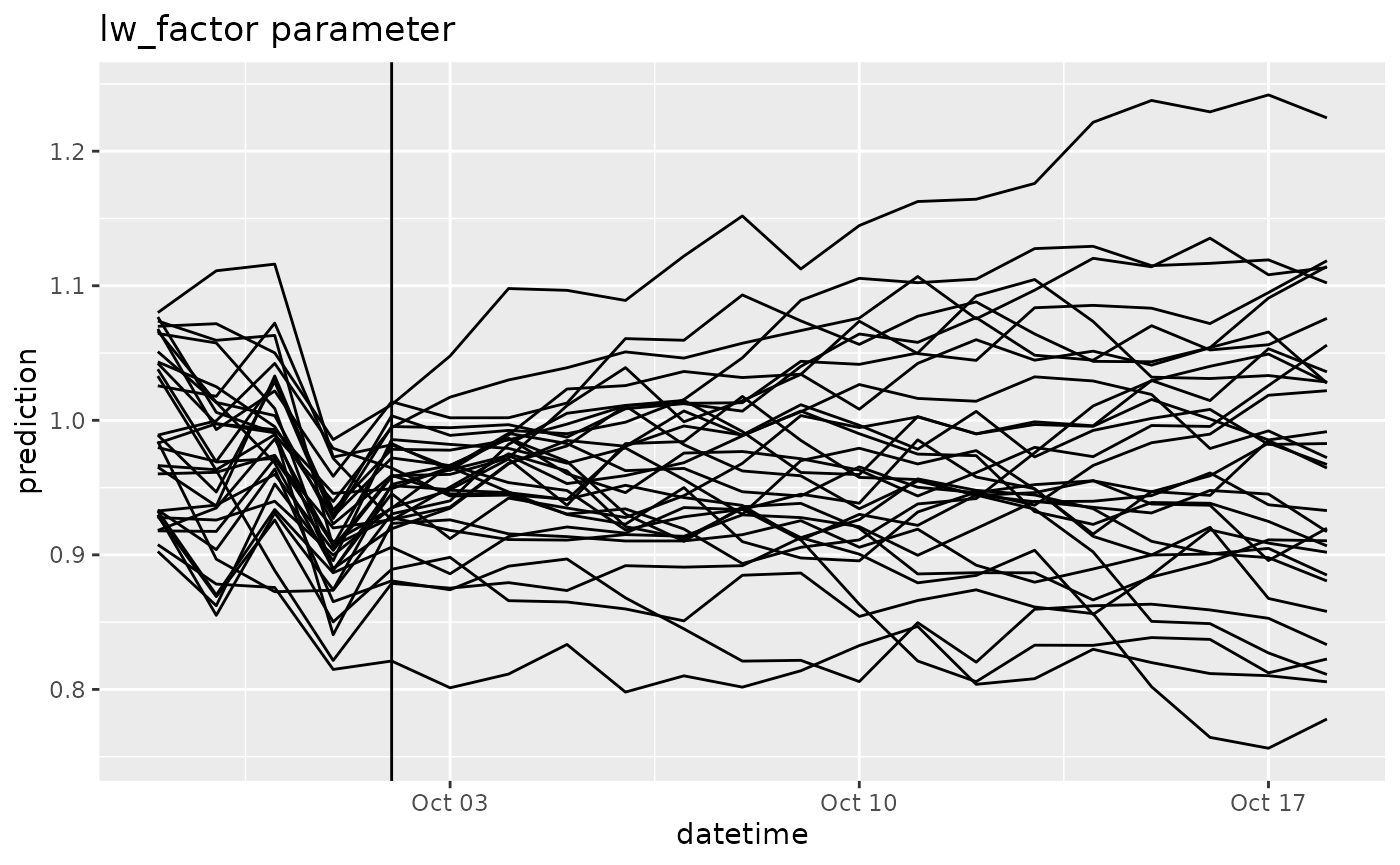

df |>

filter(variable == "lw_factor") |>

ggplot(aes(x = datetime, y = prediction, group = parameter)) +

geom_line() +

geom_vline(aes(xintercept = as_datetime(reference_datetime))) +

labs(title = "lw_factor parameter") ## 5. Comparing to observations

## 5. Comparing to observations